Riding the surge of hype around ChatGPT and other artificial intelligence products, Nvidia introduced new chips, supercomputing services and a raft of high-profile partnerships on Tuesday intended to showcase how its technology will fuel the next wave of AI breakthroughs.

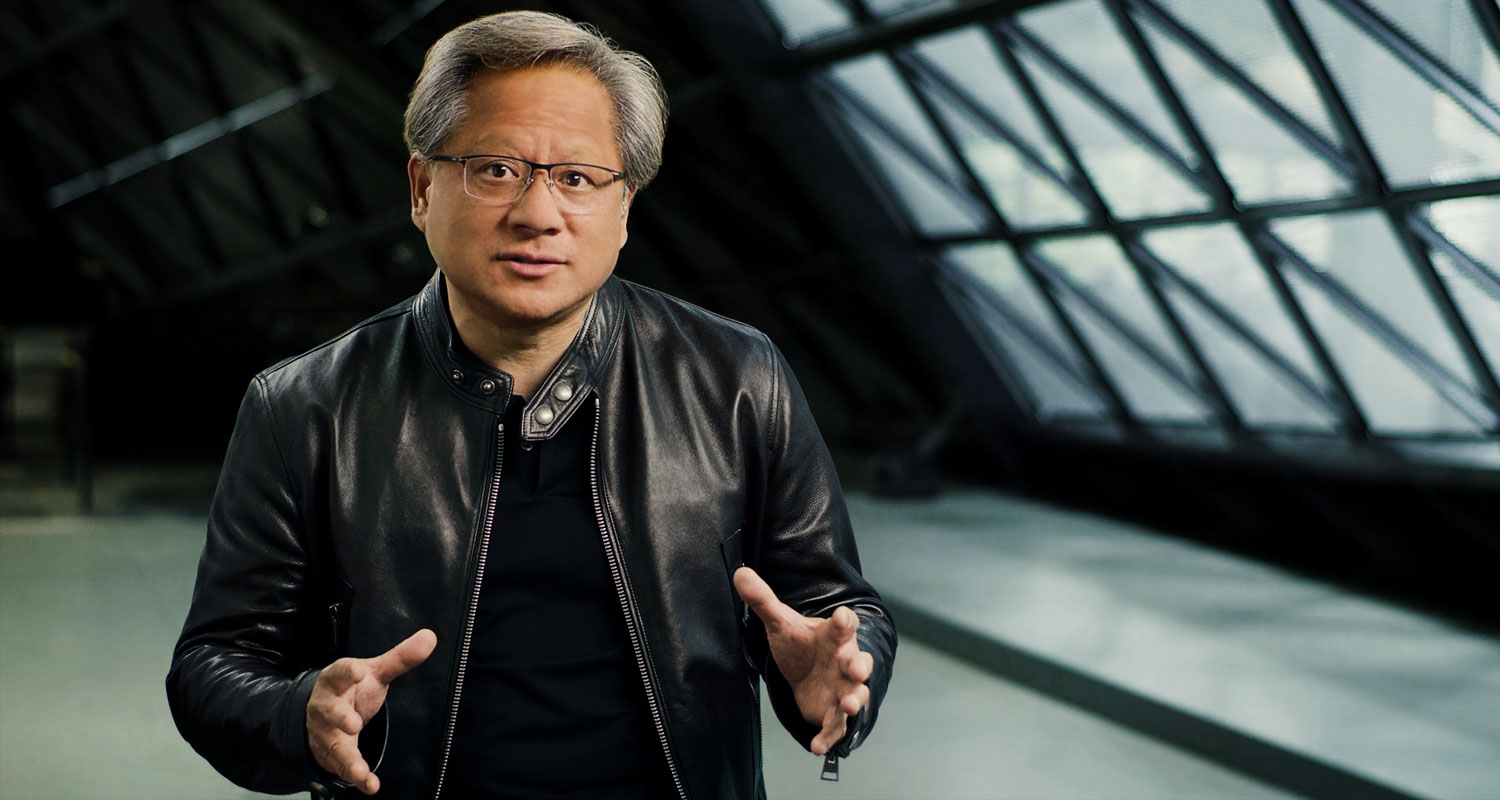

At the chip maker’s annual developer conference, CEO Jensen Huang positioned Nvidia as the engine behind “the iPhone moment of AI”, as he’s taken to calling this inflection point in computing. Spurred by a boom in consumer and enterprise applications, such as advanced chatbots and eye-popping graphics generators, “generative AI will reinvent nearly every industry”, Huang said.

The idea is to build infrastructure that can make AI apps faster and more accessible to customers. Nvidia’s graphics processing units have become the brains behind ChatGPT and its ilk, helping them digest and process ever-larger volumes of training data. Microsoft revealed last week that it had to string together tens of thousands of Nvidia’s A100 GPUs in data centres to handle the computational workloads in the cloud for OpenAI, ChatGPT’s developer.

Other tech giants are following suit with similarly colossal cloud infrastructures geared for AI. Oracle announced that its platform will feature 16 000 Nvidia H100 GPUs, the A100’s successor, for high-performance compute applications, and Nvidia said a forthcoming system from Amazon Web Services will be able to scale up to 20 000 interconnected H100s. Microsoft has likewise started adding the H100 to its server racks.

These kinds of chip superclusters are part of a push by Nvidia to rent out supercomputing services through a new program called DGX Cloud, hosted by Oracle and, soon, Microsoft Azure and Google Cloud. Nvidia said the goal is to make accessing an AI supercomputer as easy as opening a webpage, enabling companies to train their models without the need for on-premises infrastructure that’s costly to install and manage.

Pricing

“Provide your job, point to your data set, and you hit go, and all of the orchestration and everything underneath is taken care of,” said Manuvir Das, Nvidia’s vice president of enterprise computing. The DGX Cloud service will start at US$37 000 (about R684 000) per instance per month, with each “instance” (essentially the amount of computing horsepower being rented) equating to eight H100 GPUs.

Nvidia also launched two new chips, one focused on enhancing AI video performance and the other an upgrade to the H100. The latter GPU is designed specifically to improve the deployment of large language models like those used by ChatGPT. Called the H100 NVL, it can run inference (that is, how AI responds to real-life queries) 12 times faster than the prior generation of A100s at scale in data centres.

Ian Buck, vice president of hyperscale and high-performance computing at Nvidia, said it will help “democratise ChatGPT use cases and bring that capability to every server and every cloud”. — Austin Carr, (c) 2023 Bloomberg LP