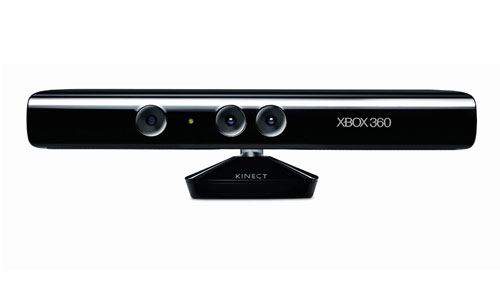

Kinect, Microsoft’s motion-sensing games accessory that sits in the corner of the room and registers a user’s intentions from his gestures, will be the shape of things to come if Chris Harrison, a researcher at the Human-Computer Interaction Institute at Carnegie Mellon University, has his way. Harrison thinks Kinect’s basic principles could be extended into a technological panopticon that monitors people’s movements and provides them with what they want, wherever they want it.

Someone in a shopping mall, for example, might hold up his hand and see a map appear instantly at his fingertips. This image might then be locked in place by the user sticking his thumb out. A visitor to a museum, armed with a suitable earpiece, could get the lowdown on what he was looking at simply by pointing at it. And a person who wanted to send a text message could tap it out with one hand on a keyboard projected onto the other, and then send it by flipping his hand over.

In each case, sensors in the wall or ceiling would be watching what these people were up to, looking for significant gestures. When the sensors noted such gestures, the system would react accordingly.

Harrison’s prototype for this idea, called Armura, started as an extension to a project he carried out at Microsoft. This was to project interactive displays onto nearby surfaces, including the user’s body. That project, called OmniTouch, combined a Kinect-like array of sensors with a small, shoulder-mounted projector. Armura takes the idea a stage further by mounting both sensors and projector in the ceiling. This frees the user from the need to carry anything, and also provides a convenient place from which to spot his gestures.

The actual detection is done by infrared light, which reflects off the user’s skin and clothes. A camera records the different shapes made by the user’s hands and arms, and feeds them into a computer program of Harrison’s devising. This program uses statistical classification techniques to identify different arrangements of the user’s arms, hands and fingers, such as arms-crossed, thumbs-in, thumbs-out, book, palms-up, palms-down and so on.

According to Harrison, the hands alone are capable of tens of thousands of interactions and gestures. The trick is to distinguish between them, matching the gesturer’s intention to his pose precisely enough that the correct consequence follows, but not so precisely that slightly nonstandard gestures are ignored. That is done by trial and error, using what is known as a machine-learning algorithm.
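To make that trade-off concrete, here is a minimal sketch in Python. It is not Harrison’s program, whose details the article does not give. It swaps in a simple nearest-centroid classifier with a rejection threshold, and the feature values, the pose examples and the `max_distance` parameter are all assumptions invented for illustration.

```python
import math

# Hypothetical training data: each pose is described by three made-up
# features (visible fingertips, palm-up score, distance between hands).
TRAINING = {
    "palms-up": [(5.0, 0.90, 0.30), (5.0, 0.80, 0.35)],
    "thumbs-out": [(1.0, 0.50, 0.30), (1.0, 0.40, 0.25)],
    "book": [(5.0, 0.90, 0.00), (5.0, 0.85, 0.05)],
}


def centroid(samples):
    """Average the example vectors recorded for one pose."""
    n = len(samples)
    return tuple(sum(s[i] for s in samples) / n for i in range(len(samples[0])))


def train(examples):
    """Learn one representative point (centroid) per pose label."""
    return {label: centroid(samples) for label, samples in examples.items()}


def classify(model, features, max_distance=0.5):
    """Return the closest pose, or None if nothing is close enough.

    max_distance is the assumed tolerance: larger values accept sloppier
    gestures, smaller values demand a more exact pose.
    """
    label, dist = min(
        ((lbl, math.dist(center, features)) for lbl, center in model.items()),
        key=lambda pair: pair[1],
    )
    return label if dist <= max_distance else None


model = train(TRAINING)
print(classify(model, (5.0, 0.88, 0.02)))  # a textbook "book" pose
print(classify(model, (4.8, 0.80, 0.10)))  # slightly off, still accepted
print(classify(model, (3.0, 0.10, 0.90)))  # unlike any known pose: rejected
```

In a sketch like this the tolerance would be tuned by trial and error against recorded gestures, which is roughly the job the article assigns to the learning algorithm.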

The result is that if someone holds his hands out like a book, information is displayed on each palm as if that palm were a page. Folding his hands turns the page. Arm movements will reveal the locations of particular exhibits or stores. And if someone fancies a bit of background music, the appropriate hand and arm movements will control which track is played and at what volume. With clever use of microphones and directional loudspeakers, indeed, it may even be possible to make phone calls.

Of course, Armura will only work where there are sensors. But then, mobile phones work only where there is a signal. It is a slightly disturbing thought that if Harrison’s technology can be made to operate routinely, such ubiquity might emerge, and the world’s streets and train carriages could be filled with people making odd gestures at no one in particular, hoping, as they do so, for enlightenment.

(c) 2012 The Economist
