On Monday last week, Richard Poplak, in his Trainspotter column on the Daily Maverick website, wrote a funny but scathing rant about the two Zuma sons, Edward and Duduzane (or is that Duduzani?). By Tuesday his Twitter timeline was clogged with insults and defamatory memes from a bunch of anonymous Twitter trolls. Talk to any South African journalist reporting on the relationship between the Guptas and President Zuma or his acolytes and they’ll tell you a similar story. Abuse from the so-called Guptabots is the new normal.

The use of social media to drive political messaging and propaganda has become common, and state-sponsored botnets have been accused of helping to get Trump elected in the US, trying to paint the Ukrainian leadership as neo-Nazis, threatening pro-democracy activists in Eritrea, or simply massaging the ego of Paul Kagame in Rwanda.

In addition, there is a flourishing commercial trade in fake accounts, purchased to bolster follower numbers on Twitter and Instagram (where “influence” has some value to marketers), or simply to be used as spambots.

On the Indian subcontinent, where labour is relatively cheap and computer literate, there are businesses set up to generate fake accounts and then sell or rent them. A simple Google search delivers hundreds of options. Over the past two years, we’ve seen these commercial “follower farms” being used to drive content from fake news sites.

The first examples of this were some of the fake news spread around the US election in 2016, much of which was traced back to Macedonian teenage entrepreneurs who abused the credulity of the Republican Party supporters on Facebook and Twitter. By using fake accounts to seed links to their websites, they could make money from advertising on the fake news sites.

But the South African situation is a little different. In most of the examples above, there are single motives: either the propaganda is used to push an agenda (such as Russia good, Ukraine bad; or Hillary Clinton is corrupt; or Paul Kagame is the saviour of Rwanda), or the fake accounts are purely commercial (spam, links to advertising-supported fake news, or just followers for sale). Locally, we see a combination of the two: propaganda not only to support the Zuma faction in the ANC, but also to protect what are alleged to be the corrupt business practices of the Guptas and their cronies.

#dumbpoplak

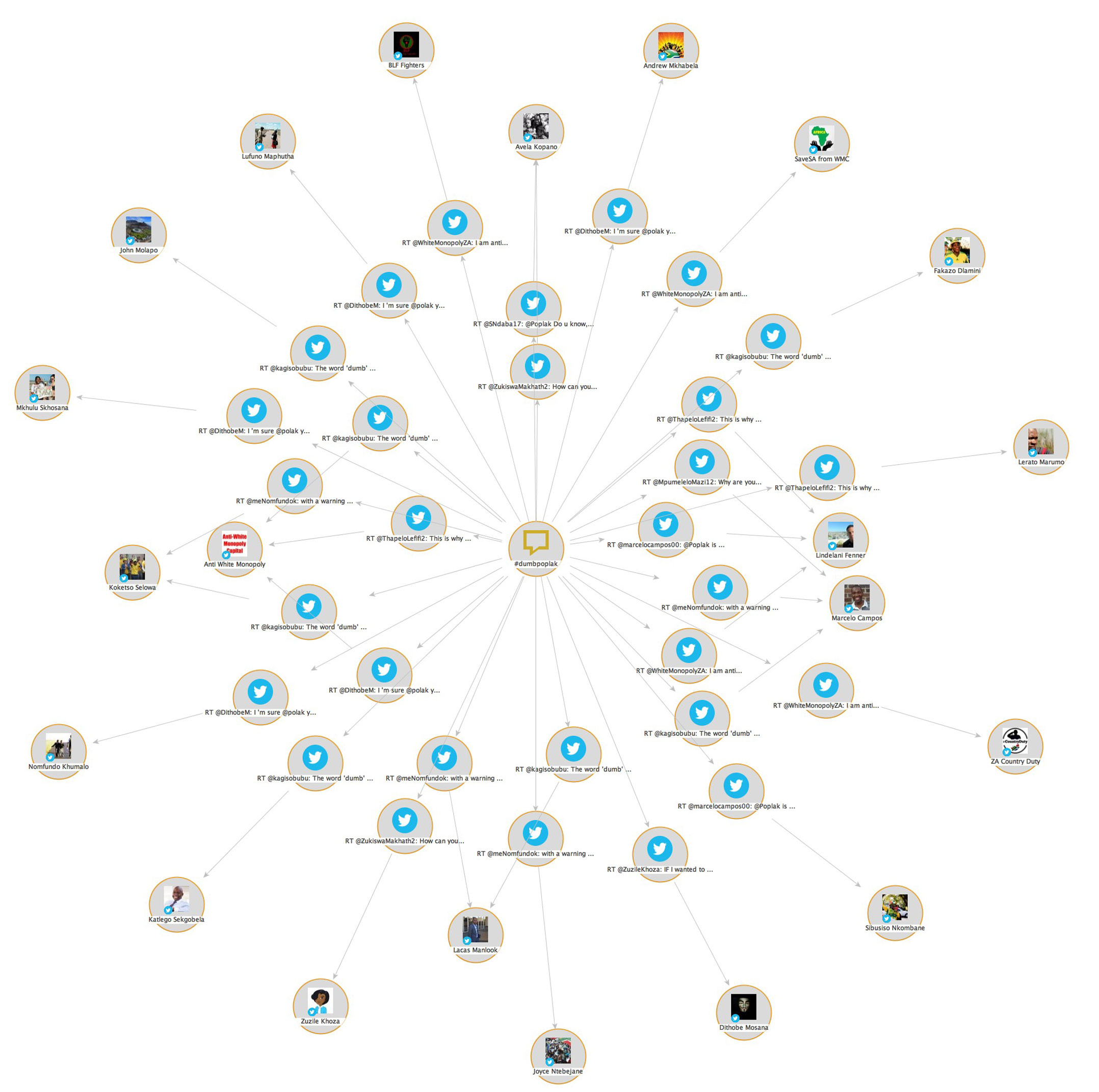

For analysis, the attack on Richard Poplak is a good example, because, until Monday’s column, the sockpuppets had largely ignored him, plus they created a unique hashtag, #dumbpoplak (along with some extremely derogatory ones). All of which helps to identify the tweets.

Over a two-hour period on Tuesday, 29 August, 21 accounts tweeted at the @poplak Twitter handle using that hashtag (and at the completely unrelated and seemingly innocent @polak account; nobody can claim that the Guptabots have good attention to detail). Of those 21 accounts, all appear to be fake. All seem connected to the WMCLeaks.com fake news website, which investigations have shown is based in and managed from India.

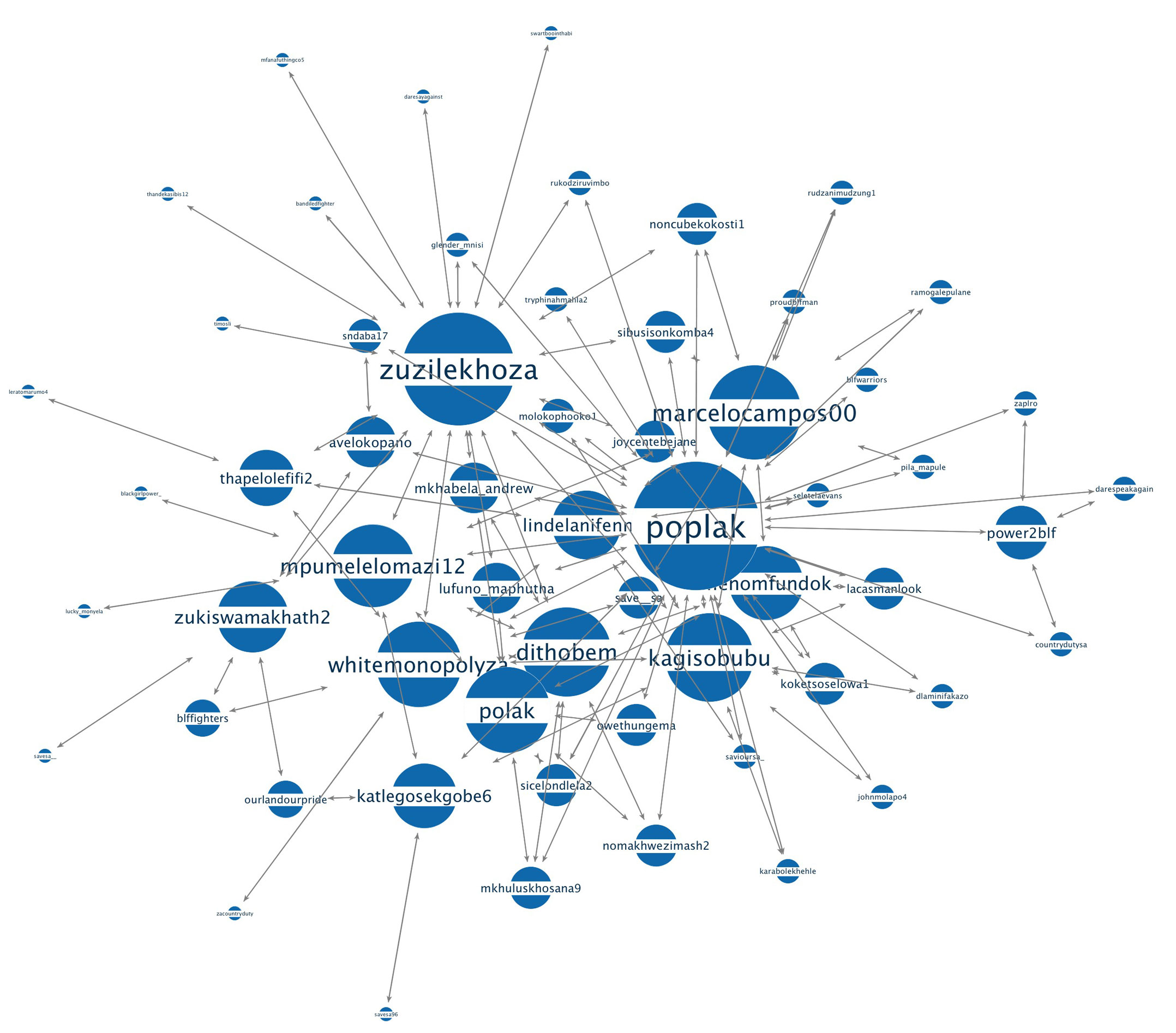

If we map the use of that hashtag, it is plain to see the use of multiple fake accounts to drive a particular message (see figure 2). It is also possible to map the affiliations of the tweets to see the accounts that are most active in driving the hashtag (see figure 3). The @poplak (and @polak) accounts are shown as having high affiliation because the tweets were directed at them — but the @zuzilekhosa, @marcelocampos00, and @kagisobubu accounts were significant in spreading the hashtag. All three of these accounts have long been suspected of being sockpuppets for Gupta-aligned actors.
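As a rough illustration of the counting behind such a map, the sketch below tallies which accounts posted a hashtag and which handles those posts were aimed at. It is not the tooling used to produce the figures. The function name `hashtag_activity`, the `(author, text)` tuple layout, and the account names `sock_1`, `sock_2` and `real_user` are all assumptions made up for the example; only `@poplak`, `@polak` and `#dumbpoplak` come from the article.

```python
import re
from collections import Counter

MENTION = re.compile(r"@(\w+)")
HASHTAG = re.compile(r"#(\w+)")


def hashtag_activity(tweets, hashtag):
    """For tweets that use `hashtag`, count tweets per author and
    mentions per targeted handle. `tweets` is a list of (author, text)."""
    wanted = hashtag.lstrip("#").lower()
    authored, mentioned = Counter(), Counter()
    for author, text in tweets:
        tags = {tag.lower() for tag in HASHTAG.findall(text)}
        if wanted not in tags:
            continue  # tweet does not carry the hashtag being mapped
        authored[author.lower()] += 1
        for handle in MENTION.findall(text):
            mentioned[handle.lower()] += 1
    return authored, mentioned


# Hypothetical sample data, invented for illustration only.
sample_tweets = [
    ("sock_1", "@poplak your column is nonsense #dumbpoplak"),
    ("sock_2", "@polak #DumbPoplak"),
    ("sock_1", "@poplak @polak read this #dumbpoplak"),
    ("real_user", "@poplak great column"),
]

authored, mentioned = hashtag_activity(sample_tweets, "#dumbpoplak")
print("most active:", authored.most_common())
print("most targeted:", mentioned.most_common())
```

Accounts that are mentioned often but never author a tagged tweet are the targets; accounts that author many tagged tweets are the ones driving the hashtag.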

The first signs of this Gupta-linked online propaganda were visible in early 2016 when a website and associated social media accounts were set up to tell the “Truth by Connor Mead”. The site was an obvious PR exercise by the Guptas: Connor Mead seemingly does not exist, and the content of the site, right down to the headline, was used as a double-page advert in The New Age newspaper (at that stage owned by the Guptas). The setting up of this site coincided with the appointment of Bell Pottinger as spin doctors for the Gupta empire. A small number of sockpuppet accounts tried to drive the website narrative, but largely unsuccessfully. The site has since been shuttered. The Twitter account, @kjbjohn832, is still open, but has been dormant since September 2016.

Real growth in the activity of the fake troll network on social media was first identified in November 2016, the key theme seeming to be that former public protector Thuli Madonsela’s “State of Capture” report was incorrect and biased. Jean le Roux, an analyst, identified around 100 accounts that were tweeting around this theme. He then demonstrated that many of the accounts were automated (tweeting identical content within seconds of each other) and identified certain “control” or seeding accounts that were supplying the content for retweets. The “white monopoly capital” theme is visible in the tweets.
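The “identical content within seconds” signal can be sketched in a few lines. This is only an illustration of the idea, not a reconstruction of le Roux’s method, which the article does not describe. The function name `find_synchronised_pairs`, the 10-second window, the `(account, text, posted_at)` layout and the `acct_*` names are assumptions invented for the example.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from itertools import combinations


def find_synchronised_pairs(tweets, window_seconds=10):
    """Return {(account_a, account_b): count} for pairs of different
    accounts that posted identical text within `window_seconds`."""
    by_text = defaultdict(list)
    for account, text, posted_at in tweets:
        # Normalise case and whitespace so trivial edits do not hide a match.
        key = " ".join(text.lower().split())
        by_text[key].append((posted_at, account))

    window = timedelta(seconds=window_seconds)
    pair_counts = defaultdict(int)
    for posts in by_text.values():
        posts.sort()  # chronological order, so t2 >= t1 below
        for (t1, a1), (t2, a2) in combinations(posts, 2):
            if a1 != a2 and t2 - t1 <= window:
                pair_counts[tuple(sorted((a1, a2)))] += 1
    return dict(pair_counts)


# Hypothetical sample data, invented for illustration only.
sample = [
    ("acct_a", "State of Capture report is biased", datetime(2016, 11, 5, 10, 0, 0)),
    ("acct_b", "State of capture  report is biased", datetime(2016, 11, 5, 10, 0, 3)),
    ("acct_c", "State of Capture report is biased", datetime(2016, 11, 5, 12, 0, 0)),
    ("acct_a", "Another message", datetime(2016, 11, 5, 10, 5, 0)),
    ("acct_b", "Another message", datetime(2016, 11, 5, 10, 5, 4)),
]

pairs = find_synchronised_pairs(sample)
print(pairs)
```

A pair of accounts that matches once may be coincidence; pairs that match repeatedly are the ones worth a closer look. The pairwise comparison is quadratic in the number of copies of each text, which is fine for a few hundred accounts but would need a sliding window for larger datasets.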

Another social media researcher had been seeing similar activity, and before the community was identified as fake, Superlinear’s Kyle Findlay had highlighted a strange convergence between tweets from Andile Mngxitama’s Black First Land First (BLF) community and those of The New Age and ANN7 media companies. Kyle was also the first to identify the white monopoly capital theme and to attempt to map its usage. Independently, two researchers had isolated a similar phenomenon — what appeared to be a botnet of pro-Zuma, pro-Gupta fake accounts driving a narrative that Pravin Gordhan, Thuli Madonsela and Mcebisi Jonas were stooges of “white monopoly capital”, most particularly of Johann Rupert.

The first quarter of 2017 continued in the same vein, but after the cabinet reshuffle in March, a new battery of fake news sites, along with a new army of fake accounts, began an assault. The WMCLeaks.com and WMCScan.com websites were created to provide content for a huge number of Twitter sockpuppets.

At the same time, similar activity was attempted on Facebook, but with nowhere near the same reach. Creating fake Facebook accounts, while not impossible, is significantly more complex than on Twitter, as can be seen in the minimal sharing and traction these Facebook accounts generate. Most of the engagement on Facebook took the form of negative comments by real South Africans, which was probably not what was planned.

There do seem to be two major nodes of these fake accounts. The WMCLeaks node is huge and likely based out of India. The few accounts that actually type content in their tweets tend to use a style of English that is foreign to South Africans. There are unusual grammatical errors and these accounts never tweet in anything but English, even if engaged in other South African languages.

The other is older and likely controlled from within South Africa and by South Africans (Kyle Findlay identifies this as the “BLF node”). These fake accounts tend to retweet anything that comes from local BLF spokespeople or leaders and local blogs like blackopinion.co.za, as well as distributing defamatory memes. Recently, this node has begun to retweet content from Nkosazana Dlamini-Zuma’s Twitter feed. Due to the type of content distributed by real BLF supporters, it is more difficult to differentiate the fake accounts from real supporters.

There is crossover in content between the two networks, with both appropriating content from the other, and it does seem that they have similar motives, if they are, in fact, not part of the same organisation.

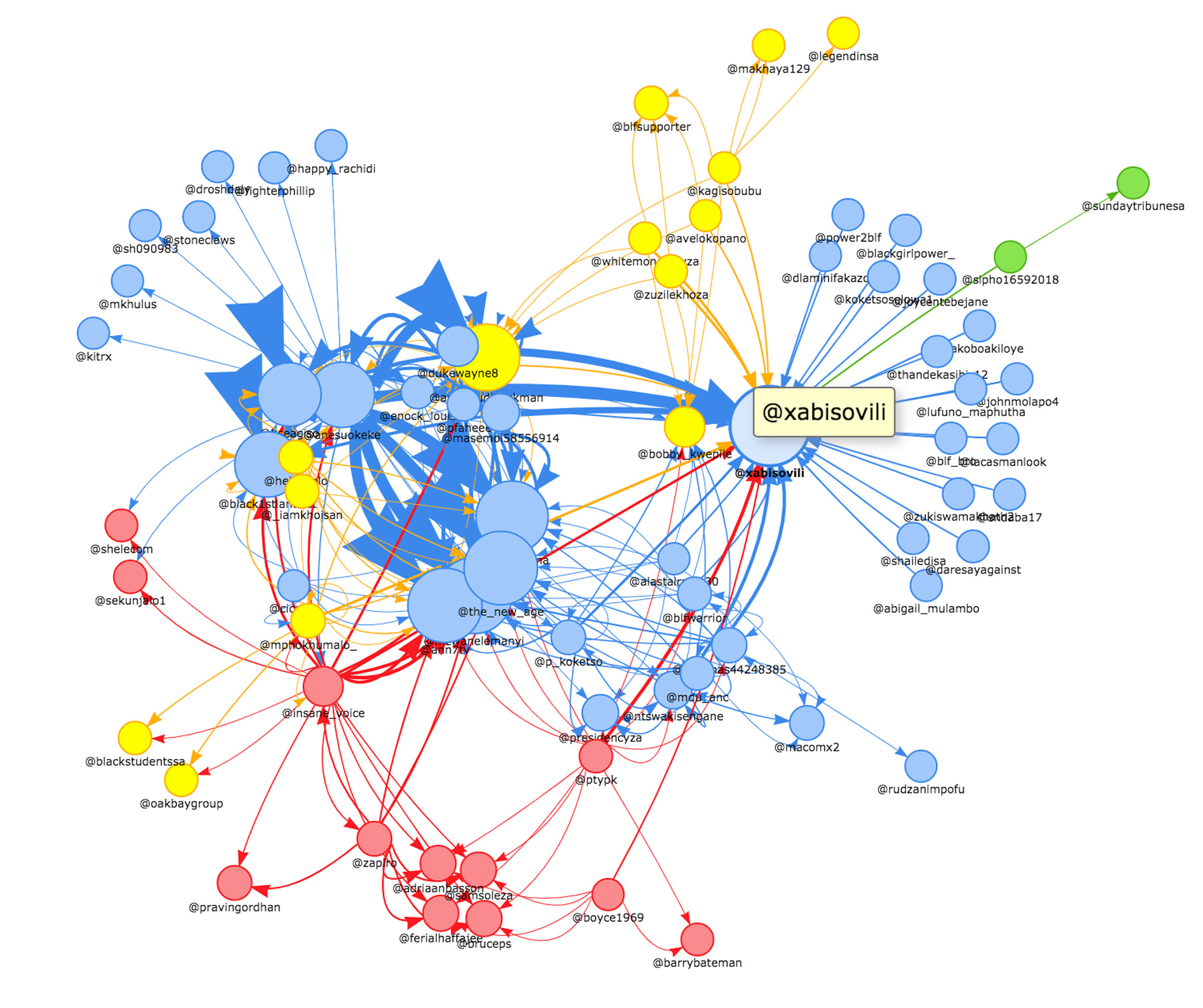

Figure 5 is a messy map of the various tweet connections by what is suspected to be a local master account, @XabisoVili. Much of the content is distributed by a group of sockpuppets (blue, upper left, and upper right), but there is significant tagging and retweeting of content from Mzwanele Manyi, Andile Mngxitama, ANN7 and The New Age Twitter accounts. The yellow portion is suspected Indian-controlled activity with some recurring master accounts, @makhaya129, @ZuzileKhosa and @avelokopano. Included in both groups is a small number of real accounts that interacted with the sockpuppets. The red portion of the map mostly consists of accounts that have been targeted by the fake accounts.

The motive for this activity isn’t completely clear and we can only judge it by the activities. There does seem to be a clear group of targets of these Guptabots: anyone opposed to the Zuma cabal in the ANC, along with anyone highlighting the activities of the Gupta family or their associates. This extends to attacking critics of Eskom, Brian Molefe, Hlaudi Motsoeneng and Manyi. There is high praise from the fake accounts for people like Mngxitama and mineral resources minister Mosebenzi Zwane, not to mention presidential hopeful Nkosazana Dlamini-Zuma.

What the accounts intend to achieve is less clear. This kind of mass propaganda work is used in countries like China to overwhelm the discussion space, a way to obfuscate the conversation. But in South Africa, this isn’t really happening; the size and competence of the network don’t allow for that. This network is using minimal automation, only using tools like TweetDeck to auto-retweet content (and that, mostly, is to an echo chamber of sockpuppets). Possibly the idea is to use mass retweeting to get hashtags to trend, but if that is the plan, they have been largely unsuccessful.

Often, we see that tweeted images are tagged with the names of the targeted people (for example, prominent investigative journalists or prominent politicians) to spam their timelines, but that is easily thwarted by adjusting Twitter’s settings. Likewise, direct tagging of Twitter handles in posts is thwarted by simply blocking (or better, muting) the accounts.

Doomed to fail

If the aim is to show that some kind of mass popular movement exists, it is doomed to fail. The network is simply too unsophisticated for that. Most of the fakes are so easily identified that they have no credibility. If anything, lately, they have become a target of derision. But there doesn’t seem to be any reduction in activity, so there may well be an objective that isn’t apparent.

One final point that must be noted is that Twitter’s abuse controls are woefully inadequate. Many, if not most, of these accounts are easily identified as fake by simple metrics like the time that the accounts were created, multiple accounts using identical mobile numbers or email addresses for registration, the kind of content that they post, the fake profile images, and the rapid retweeting by multiple accounts of identical content. Many of these can be automatically identified, and, if Twitter had the will to shut down fake accounts, I’m sure that they would be. But Twitter seems less than willing to institute these kinds of measures.

(c) 2017 NewsCentral Media

- Andrew Fraser is an independent marketing consultant