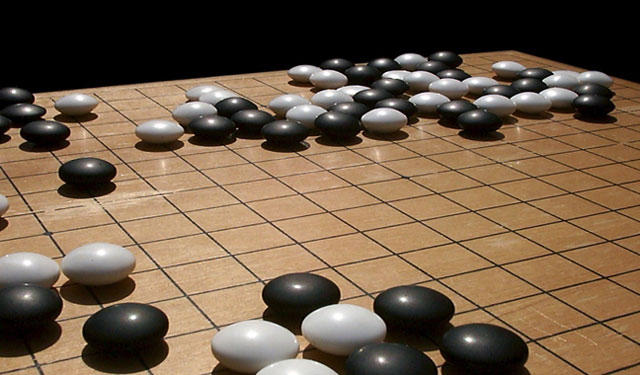

On Saturday, something truly remarkable happened. An artificial intelligence program beat the (human) world champion at Go, an ancient Chinese board game. Google’s AlphaGo bot won its third game in the five-game series against South Korea’s Lee Sedol to clinch a decisive victory.

That might not sound very impressive — after all, IBM’s Deep Blue beat the world champion of chess, Garry Kasparov, way back in 1997. But in computational terms chess is far simpler than Go.

To understand why, think back to the fable of the inventor of chess. When he presented his game to the king, the delighted ruler asked him to name a reward. The inventor asked for what seemed like the most humble of prizes: a grain of rice on the first square of the board, doubled for each subsequent square.

The king’s advisors quickly realised that by doubling the quantity of rice per square, the inventor had effectively asked for more rice than existed in the entire world. Modern estimates put the total amount of rice required at over 400bn tons. Such is the power of exponential growth.
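The fable's arithmetic is easy to check. The sketch below sums the grains on all 64 squares and converts the total to tonnes. The mass of 25 milligrams per grain is an assumed round figure, not something taken from the fable, so the tonnage is only indicative.

```python
# Grains on each square: 1 on the first, doubling each time (2**0 .. 2**63).
grains_per_square = [2 ** square for square in range(64)]
total_grains = sum(grains_per_square)

# The sum of a doubling series has a closed form: 2**64 - 1.
assert total_grains == 2 ** 64 - 1

# ASSUMPTION: one grain of rice weighs about 25 mg (illustrative round number).
GRAIN_MASS_MG = 25
MG_PER_TONNE = 1_000_000_000  # 1 tonne = 1,000 kg = 1e9 mg

total_tonnes = total_grains * GRAIN_MASS_MG / MG_PER_TONNE

print(f"{total_grains:,} grains")
print(f"about {total_tonnes / 1e9:.0f}bn tonnes")
```

Under that assumed grain mass the total comes to roughly 460bn tonnes, which is in line with the estimate quoted above.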

Where chess has only 64 squares, Go is played on the 361 points of a 19-by-19 grid. There are thus more possible games of Go than there are particles in the observable universe. And not just a few more, but trillions upon trillions more.
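For a rough sense of scale, here is a sketch. Each of the 361 points can be empty, black or white, which gives 3 to the power of 361 as a crude upper bound on board configurations. Many of those are not legal positions, and the number of possible games is larger still. The figure of 10 to the power of 80 particles is a commonly quoted order-of-magnitude estimate, used here as an assumption.

```python
POINTS_ON_GO_BOARD = 19 * 19  # 361 points on a full-size board

# Crude upper bound: every point is empty, black or white.
# This overcounts, because it includes positions the rules do not allow.
go_configurations = 3 ** POINTS_ON_GO_BOARD

# ASSUMPTION: roughly 10**80 particles in the observable universe.
PARTICLES_IN_UNIVERSE = 10 ** 80

# Compare sizes by counting decimal digits (Python integers are exact).
digits = len(str(go_configurations))
ratio_digits = len(str(go_configurations // PARTICLES_IN_UNIVERSE))

print(f"3**361 has {digits} digits")
print(f"it exceeds the particle estimate by a factor with {ratio_digits} digits")
```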

This means that, unlike in chess or checkers, brute computational force is just not practical. All the computing power on Earth could spend a century calculating all the mathematical consequences of a single move and still not be able to make a decision.

This is why, in 2001, Jonathan Schaeffer, one of the most gifted contributors to the field of artificial intelligence, said that it would “take many decades of research and development before world-championship-calibre Go programs exist”.

So, what has changed? The secret is in a new field of artificial intelligence called “deep learning”. AlphaGo’s algorithms combed through about 30m board positions taken from online Go games between humans.

In doing so, it constructed a kind of statistical cheat sheet that allows it quickly to suggest moves based on similar board positions from other games. This trims the mathematical possibilities of any one move down to a manageable size.
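As a toy illustration of that trimming (this is not AlphaGo's actual code): suppose a learned model gives each candidate move a score, and the search only follows the best few. The move names, the scores, the figure of 250 moves per turn and the cut-off of 5 are all invented for the example.

```python
def top_k_moves(move_scores, k):
    """Return the k moves with the highest scores, best first."""
    return sorted(move_scores, key=move_scores.get, reverse=True)[:k]


def positions_to_examine(moves_per_turn, turns_ahead):
    """Count the positions reached if every move is followed at each turn."""
    return moves_per_turn ** turns_ahead


# HYPOTHETICAL scores, standing in for what a trained model might suggest.
move_scores = {
    "D4": 0.40, "Q16": 0.30, "K10": 0.15,
    "C3": 0.10, "A1": 0.01, "T19": 0.01,
}
shortlist = top_k_moves(move_scores, k=3)

# ASSUMPTION: about 250 possible moves per turn, looking 4 turns ahead.
full_search = positions_to_examine(250, 4)
# Same depth, but following only an assumed 5 suggested moves per turn.
trimmed_search = positions_to_examine(5, 4)

print("shortlist:", shortlist)
print(f"every move: {full_search:,} positions")
print(f"top 5 only: {trimmed_search:,} positions")
```

Even in this small example, following a handful of suggestions instead of every move cuts the work from billions of positions to a few hundred.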

Another part of AlphaGo then takes those moves and plays them forward with a tree search, a descendant of the old-fashioned brute force that beat Kasparov back in 1997, but one that is far more selective about which positions it examines. Lee is not playing against one computer but against a whole cluster of them, running a “deep neural network” (DNN).

This kind of software loosely mimics the neurons in a human brain, which allows it to learn from (digital) stimuli rather than follow hand-written rules. DNNs can be used to recognise faces, to read handwriting and to understand speech. Fuzzy and ambiguous data, normally the bane of computer scientists, is no problem for a DNN because it is not so much programmed as self-taught. Whether you find this exciting or terrifying probably depends on whether or not you’re a fan of the Terminator movies.

In some ways, DNNs could be seen as cheating. They rely entirely on data produced by humans. As such, what they do could be classed as imitation rather than true intelligence. But much of human intelligence is based on exactly the same kind of imitation.

Schoolchildren are not required to formulate their own mathematical system from scratch. No one has to develop their own language or independently discover the theory of relativity. We all stand on the shoulders of the people who have lived before us.

We can take comfort in the fact that even AlphaGo cannot simulate human imagination. Our ability to conjure ideas from thin air is still safe, at least for now. DNNs are incredibly powerful but they still require a defined goal and discrete inputs. They are not self-directed in any real sense.

However, during the second game against Lee, AlphaGo suddenly made a move that seemed completely bizarre and pointless. Then, after a few minutes, the power of the move became clear. Fan Hui, the European Go champion who was watching the match, described the move as “beautiful” and said “I’ve never seen a human play this move”.

That kind of unexpected and original behaviour skirts perilously close to what we would call imagination. It may be that our imaginations are nothing more than a sum of all the stimuli we’ve ever been exposed to, with some randomness thrown in. However, until AlphaGo starts demanding its own limousine, we’re probably safe from being replaced.

The real game here, though, has little to do with Go. Google is in a race with other technology giants like Apple, Amazon, Baidu and Facebook to dominate the field of artificial intelligence. Whoever wins could reap rewards greater than the entire Internet currently offers.

Combined with physical robotics, artificial intelligence could change the world of work far more dramatically than either computers or the Internet. From robotic bricklayers and supermarket clerks to virtual accountants and notaries, these technologies could disrupt the entire global economy.

On Sunday, Lee salvaged some pride by beating AlphaGo in the fourth game of the series. We might take some comfort in this: a human being is still able to prevail against the awesome power of Google’s DNN. But the time of the machines will soon be here. We need to start thinking about how we will make this work to our collective advantage and not our misery.

(c) 2016 NewsCentral Media